Getting Started with Elasticsearch

Elasticsearch is a powerful, distributed search engine that companies like GitHub and SoundCloud use to power their search capabilities. This crash course is a high-level overview that bootstraps a local Elasticsearch instance using college basketball rankings while answering three specific questions:

- What is Elasticsearch and why is it useful?

- How is Elasticsearch architected?

- How can I get started with it?

Introduction

Elasticsearch is an open-source distributed search engine built on Apache Lucene. It was first released by Shay Banon in 2010, is now developed by the company Elastic, and powers search for hundreds of tech companies, some of which you've probably heard of: Foursquare, GitHub and SoundCloud. It is the 'E' in the powerful ELK Stack (Elasticsearch, Logstash and Kibana), and as a search engine, Elasticsearch has numerous capabilities and benefits:

- Distributed - Elasticsearch distributes the search workload across multiple nodes, or servers. If one node fails, the system continues to process requests (as long as replica copies of its data live on other nodes) and automagically reorganizes itself. This (1) helps avoid a single point of failure, (2) provides high availability and (3) takes load off your application servers and other services.

- Schemaless JSON Storage - Elasticsearch is a schemaless NoSQL datastore, which means that right out of the gate you do not need to specify columns or data types for it to work. It is smart enough to figure most of that out, although down the road you will want to specify objects and data types to increase performance. Elasticsearch stores everything as JSON.

- REST API - You access your Elasticsearch instance by sending HTTP requests to its built-in REST API. The API comes with every instance out of the gate, making it easy to send and receive data.

- High Performance - Elasticsearch and its underlying technology, Lucene, were built to handle full-text search and complex querying. You will be amazed how wicked fast you can search large amounts of data.

Elasticsearch is great for searching large amounts of data (gigabytes to terabytes) and will probably do everything you need and then some. However, these benefits come with a learning curve, and you need to understand the ins and outs of the Elasticsearch DSL to properly fine-tune your search engine. Whether you should use Elasticsearch or another option like Postgres' full-text search (it's pretty damn good) comes down to the experience you plan to provide your users. Answering a few questions might help you decide:

- Is search functionality core to the user experience?

- How much data will users be searching through?

- Do you have the time and resources to implement robust search functionality?

If you need a full-featured, high-performance search engine with high availability at scale, Elasticsearch is probably right for you.

Elasticsearch Architecture + Terminology

First, let's cover some basic terminology.

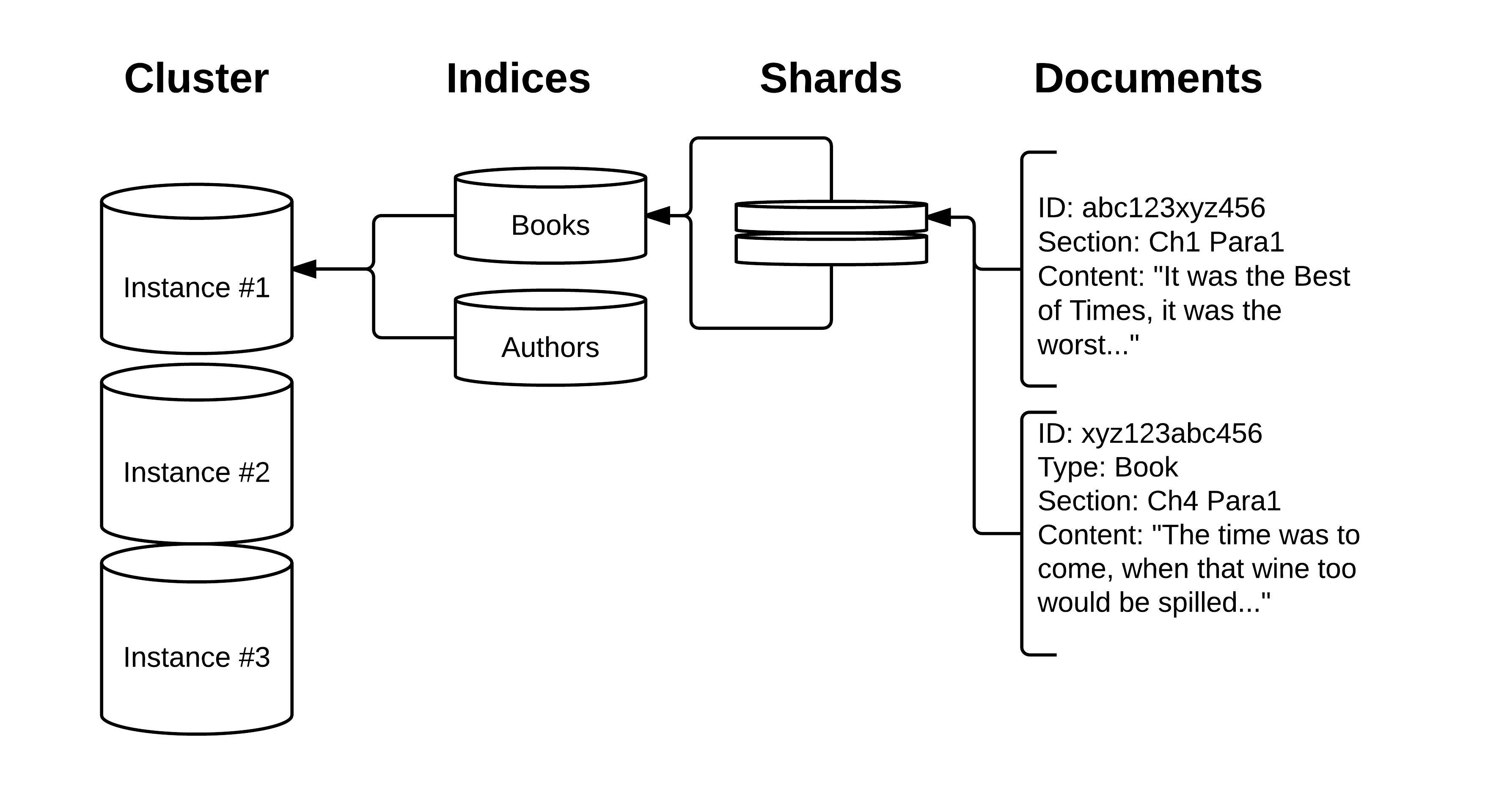

Each Elasticsearch cluster, or search engine, is distributed, allowing you to add nodes to scale when needed. When new nodes join a cluster, the cluster auto-magically reorganizes itself to redistribute work. Within the cluster, one node is elected master and manages cluster-wide tasks such as tracking the other nodes and deciding where shards live. A little terminology might help:

- Cluster: A group of nodes with the same cluster name that work together.

- Node: A single Elasticsearch instance or server.

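- Index: A collection of similar documents, split into one or more shards.

- Type: A logical categorization of documents within an index (types were deprecated and later removed in newer Elasticsearch versions; this guide uses 1.x).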

- Shard: A slice of an index and a container of data and metadata.

- Document: The most basic storage unit, a JSON object.

To use database terminology as a metaphor, think of each node as a database and each index as a table within that database. Following that train of logic, a document is an individual row of data, and a shard is a horizontal slice of the table that holds a subset of its rows:

- Node >> Database

- Index >> Table

- Shard >> Partition (a subset of rows)

- Document >> Row

For example, this is how a book application might use Elasticsearch:

While this metaphor is not perfect, hopefully the concept of distributed indices makes a little more sense now.
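Shards are easier to picture once you see how documents are assigned to them. Conceptually, Elasticsearch picks a document's primary shard with `shard = hash(_routing) % number_of_primary_shards`, where `_routing` defaults to the document's `_id`. The Python sketch below mimics that rule under one loud assumption: it substitutes `zlib.crc32` for Elasticsearch's internal hash function, so the shard numbers it produces will not match a real cluster. `shard_for` and `distribute` are hypothetical helper names.

```python
import zlib

# Matches the five primary shards reported for the `teams` index later in this guide.
NUMBER_OF_PRIMARY_SHARDS = 5


def shard_for(routing_value: str, num_shards: int = NUMBER_OF_PRIMARY_SHARDS) -> int:
    """Pick a primary shard: hash(_routing) % number_of_primary_shards.

    zlib.crc32 is a stand-in hash for illustration only; Elasticsearch uses its
    own hash function, so real assignments differ.
    """
    return zlib.crc32(routing_value.encode("utf-8")) % num_shards


def distribute(doc_ids, num_shards: int = NUMBER_OF_PRIMARY_SHARDS):
    """Group document ids by the shard each one would land on."""
    shards = {n: [] for n in range(num_shards)}
    for doc_id in doc_ids:
        shards[shard_for(doc_id, num_shards)].append(doc_id)
    return shards


# Document ids taken from the search results later in this guide.
example_ids = [
    "AU4EU_AbYcgijd8NQKy0",
    "AU4EUrCxYcgijd8NQKyx",
    "AU4EU-HEYcgijd8NQKyz",
    "AU4EVAkGYcgijd8NQKy1",
    "AU4EU9AAYcgijd8NQKyy",
]
for shard, members in distribute(example_ids).items():
    print(shard, members)
```

Because the shard count is part of the formula, every document would map to a different shard if that count changed, which is why in 1.x the number of primary shards is fixed when an index is created.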

Searching, aka Querying and Filtering

There are two primary uses of the Elasticsearch DSL, querying and filtering, both of which usually run against a specific index. Filtering finds documents that exactly match a set of conditions, while querying is used for free-text search. Filtering is most useful when asking questions with an exact answer:

- Was this blog post written in 2015?

- Did Mike write this blog post?

- What are all the published blog posts written in 2015 by Mike?

- Which are the top 10 most read blog posts?

Queries are similar to filters but also calculate a relevancy score to rank the best-matching results. Full-text search is probably the most common use case here; think Google search.
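To make the distinction concrete, here are request bodies for a few of the questions above, written as Python dictionaries. This is a sketch under stated assumptions: the blog-post fields (`author`, `year`, `published`, `views`, `body`) are hypothetical, `author` values are assumed to be stored as lowercase exact values, and the `filter` clause of a `bool` query is the syntax of Elasticsearch 2.0 and later (1.x, used in the setup below, expressed the same idea with a separate `filtered` query).

```python
import json

# Hypothetical blog-post fields: "author", "year", "published", "views", "body".

# "What are all the published blog posts written in 2015 by Mike?"
# Exact yes/no conditions go in a filter: documents match or they don't, no scoring.
published_by_mike_2015 = {
    "query": {
        "bool": {
            "filter": [
                {"term": {"author": "mike"}},  # assumes lowercase exact-value author field
                {"term": {"year": 2015}},
                {"term": {"published": True}},
            ]
        }
    }
}

# "Which are the top 10 most read blog posts?" is really a sort plus a size limit.
top_ten_most_read = {"size": 10, "sort": [{"views": {"order": "desc"}}]}

# Free-text search: a match query scores every hit by relevance.
full_text = {"query": {"match": {"body": "college basketball rankings"}}}

for label, body in [
    ("filter", published_by_mike_2015),
    ("sort", top_ten_most_read),
    ("query", full_text),
]:
    print(label, json.dumps(body))
```

Each body would be sent to an index's `_search` endpoint; only the `match` query produces meaningful relevancy scores.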

I don't want to go any deeper on search, since you drop into a rabbit hole quickly, but just know that Elasticsearch's API is incredibly powerful and can probably do what you need, and then some. Now that we have a high-level overview, let's jump into setting up a local instance.

Elasticsearch Setup Guide

In this example setup, we are going to build a simple college basketball rankings index that we will build some basic queries with. The initial

- Download Elasticsearch from the Elastic website.

- If you don't have Java installed, you will need a recent Java Development Kit (JDK). Elasticsearch will refuse to start if a known-bad version of Java is used.

- Once everything is installed, start your Elasticsearch server from the root of your Elasticsearch directory with:

```shell
bin/elasticsearch
```

- Verify the server is running with the following command, or visit the same URL in your browser:

```shell
curl -X GET 'http://localhost:9200/'
```

If your server is running properly, you should receive a JSON response similar to this:

```json
{
  "status" : 200,
  "name" : "Foreigner",
  "cluster_name" : "elasticsearch",
  "version" : {
    "number" : "1.6.0",
    "build_hash" : "cdd3ac4dde4f69524ec0a14de3828cb95bbb86d0",
    "build_timestamp" : "2015-06-09T13:36:34Z",
    "build_snapshot" : false,
    "lucene_version" : "4.10.4"
  },
  "tagline" : "You Know, for Search"
}
```
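If you would rather script this check, here is a minimal Python sketch using only the standard library. It assumes an unsecured node at `http://localhost:9200/` like the one in this guide (Elasticsearch 8.x enables security by default, so there you would need HTTPS and credentials). `cluster_summary` and `fetch_root` are hypothetical helper names, and only fields present in the response above are read.

```python
import json
import urllib.request


def cluster_summary(body: str) -> dict:
    """Extract the fields worth checking from the JSON returned by GET /."""
    info = json.loads(body)
    return {
        "cluster_name": info["cluster_name"],
        "version": info["version"]["number"],
        "lucene_version": info["version"].get("lucene_version"),
    }


def fetch_root(url: str = "http://localhost:9200/") -> dict:
    """Same request as the curl command above; needs a running, unsecured node."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return cluster_summary(resp.read().decode("utf-8"))
```

Calling `fetch_root()` against the instance above should report the cluster name `elasticsearch` and version `1.6.0`.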

Cool. Before we load any data, let's view some information about our Elasticsearch instance. Run the following from your command line or visit the path in your browser:

```shell
curl -X GET 'http://localhost:9200/_cat/?pretty=true'
```

You should receive a response that looks something like this:

```
=^.^=
/_cat/allocation
/_cat/shards
/_cat/shards/{index}
/_cat/master
/_cat/nodes
/_cat/indices
/_cat/indices/{index}
/_cat/segments
/_cat/segments/{index}
/_cat/count
/_cat/count/{index}
/_cat/recovery
/_cat/recovery/{index}
/_cat/health
/_cat/pending_tasks
/_cat/aliases
/_cat/aliases/{alias}
/_cat/thread_pool
/_cat/plugins
/_cat/fielddata
/_cat/fielddata/{fields}
```

That GET request is the most basic request you can make to the cat API. The response lists the paths for settings and overview information on the various pieces of your Elasticsearch instance.

Two extra pieces of advice moving forward: append ?pretty=true to the end of each URL for cleaner response formatting, and add v as a query parameter to request verbose output, which provides table headers and a little more detail.

Next, let's take a look at all of our indices:

```shell
curl -X GET 'http://localhost:9200/_cat/indices?pretty=true&v'
```

We don't get any indices back because we haven't created any yet, so let's create one:

```shell
curl -X PUT 'http://localhost:9200/teams?pretty=true'
```

You should receive the following response back: { "acknowledged" : true }

Now let's take a look at our indices again:

```shell
curl -X GET 'http://localhost:9200/_cat/indices?pretty=true&v'
```

```
health status index pri rep docs.count docs.deleted store.size pri.store.size
yellow open teams 5 1 0 0 575b 575b
```
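The `v` header row also makes `_cat` output easy to script against. Below is a minimal Python sketch, assuming no cell contains whitespace (true for the `_cat/indices` columns shown here), that turns the verbose table into one dictionary per index; `parse_cat_table` is a hypothetical helper name. As for the `yellow` health: on a single node it is expected, since all primary shards are allocated but each replica needs a different node to live on.

```python
from typing import Dict, List


def parse_cat_table(text: str) -> List[Dict[str, str]]:
    """Turn verbose (?v) _cat output into one dict per row, keyed by the header row.

    Assumes no cell contains whitespace, which holds for the _cat/indices columns here.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]
```

Every value comes back as a string, so convert columns such as `pri` or `docs.count` with `int()` before doing arithmetic on them.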

Cool. Elasticsearch sees our 'teams' index. Now let's take a closer look at our newly created index:

```shell
curl -X GET 'http://localhost:9200/teams?pretty=true&v'
```

Your response should look something like this:

```json
{
  "teams" : {
    "aliases" : { },
    "mappings" : { },
    "settings" : {
      "index" : {
        "creation_date" : "1434552299933",
        "number_of_shards" : "5",
        "number_of_replicas" : "1",
        "version" : {
          "created" : "1060099"
        },
        "uuid" : "jUxo1CR3Qm--O_0lTHZecg"
      }
    },
    "warmers" : { }
  }
}
```

This response shows all of the information Elasticsearch has about our teams index, which at this point is only metadata and default configuration, such as the number of shards. Next, we are going to load some data into our index. For the purposes of this guide, we will only load a few documents. When creating documents, you need to specify a type; in this instance I arbitrarily chose 2015, as this was the year of the RPI ratings. In practice, you will want to determine the categorical types based on the structure of your data, and describe their fields and data types in what Elasticsearch calls a mapping. Now, let's add some team documents to our teams index:

```shell
curl -X POST 'http://localhost:9200/teams/2015/' -d '{"rpi":1,"name":"Kentucky","conference":"SEC","record":"34-0","wins":34,"losses":0}'
curl -X POST 'http://localhost:9200/teams/2015/' -d '{"rpi":2,"name":"Villanova","conference":"BE","record":"32-2","wins":32,"losses":2}'
curl -X POST 'http://localhost:9200/teams/2015/' -d '{"rpi":3,"name":"Kansas","conference":"B12","record":"26-8","wins":26,"losses":8}'
curl -X POST 'http://localhost:9200/teams/2015/' -d '{"rpi":4,"name":"Wisconsin","conference":"B10","record":"31-3","wins":31,"losses":3}'
curl -X POST 'http://localhost:9200/teams/2015/' -d '{"rpi":5,"name":"Arizona","conference":"P12","record":"31-3","wins":31,"losses":3}'
```

For each document, you should receive a response like this one; note "created":true:

{"_index":"teams","_type":"2015","_id":"AU4EUrCxYcgijd8NQKyx","_version":1,"created":true}
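The five curl commands differ only in their data, so a short script can build them instead. This Python sketch uses only the standard library and assumes the local 1.x node and the `teams/2015` index/type path from this guide (custom types like `2015` are not accepted by newer versions). `team_document`, `index_request` and `index_all` are hypothetical helpers; deriving `record` from `wins` and `losses` keeps the two fields from drifting apart.

```python
import json
import urllib.request

BASE_URL = "http://localhost:9200"  # assumption: the local 1.x instance from this guide

# (rpi, name, conference, wins, losses) for the five teams indexed above
TEAMS = [
    (1, "Kentucky", "SEC", 34, 0),
    (2, "Villanova", "BE", 32, 2),
    (3, "Kansas", "B12", 26, 8),
    (4, "Wisconsin", "B10", 31, 3),
    (5, "Arizona", "P12", 31, 3),
]


def team_document(rpi, name, conference, wins, losses):
    """Build one team document; "record" is derived so it always agrees with wins/losses."""
    return {
        "rpi": rpi,
        "name": name,
        "conference": conference,
        "record": f"{wins}-{losses}",
        "wins": wins,
        "losses": losses,
    }


def index_request(doc, index="teams", doc_type="2015"):
    """Build (without sending) a POST equivalent to the curl commands above.

    The Content-Type header is not needed by 1.x but is required by Elasticsearch 6.0+.
    """
    return urllib.request.Request(
        f"{BASE_URL}/{index}/{doc_type}/",
        data=json.dumps(doc, separators=(",", ":")).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def index_all(teams=TEAMS):
    """Send one request per team and return the parsed responses (needs a running node)."""
    responses = []
    for team in teams:
        with urllib.request.urlopen(index_request(team_document(*team))) as resp:
            responses.append(json.load(resp))
    return responses
```

Calling `index_all()` against a running node should return one parsed response per team, like the one shown above.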

Now that we have some data in there, let's run an empty search:

```shell
curl -X GET 'http://localhost:9200/teams/_search?pretty=true'
```

And now we get a big response back:

```json
{
  "took" : 49,
  "timed_out" : false,
  "_shards" : {
    "total" : 5,
    "successful" : 5,
    "failed" : 0
  },
  "hits" : {
    "total" : 5,
    "max_score" : 1.0,
    "hits" : [ {
      "_index" : "teams",
      "_type" : "2015",
      "_id" : "AU4EU_AbYcgijd8NQKy0",
      "_score" : 1.0,
      "_source":{"rpi":4,"name":"Wisconsin","conference":"B10","record":"31-3","wins":31,"losses":3}
    }, {
      "_index" : "teams",
      "_type" : "2015",
      "_id" : "AU4EUrCxYcgijd8NQKyx",
      "_score" : 1.0,
      "_source":{"rpi":1,"name":"Kentucky","conference":"SEC","record":"34-0","wins":34,"losses":0}
    }, {
      "_index" : "teams",
      "_type" : "2015",
      "_id" : "AU4EU-HEYcgijd8NQKyz",
      "_score" : 1.0,
      "_source":{"rpi":3,"name":"Kansas","conference":"B12","record":"26-8","wins":26,"losses":8}
    }, {
      "_index" : "teams",
      "_type" : "2015",
      "_id" : "AU4EVAkGYcgijd8NQKy1",
      "_score" : 1.0,
      "_source":{"rpi":5,"name":"Arizona","conference":"P12","record":"31-3","wins":31,"losses":3}
    }, {
      "_index" : "teams",
      "_type" : "2015",
      "_id" : "AU4EU9AAYcgijd8NQKyy",
      "_score" : 1.0,
      "_source":{"rpi":2,"name":"Villanova","conference":"BE","record":"32-2","wins":32,"losses":2}
    } ]
  }
}
```

Awesome! The response is a JSON object with some metadata about the search, including how long the query took (took, in milliseconds) and the number of results (hits.total). In each search result we see the record itself (_source) along with the ID that our instance assigned to the document and a relevancy score (_score). I won't go into relevancy scores here, but they become important when you add full-text search.
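Because an empty search gives every hit a score of 1.0, the order of the hits above says nothing about the rankings. Here is a minimal Python sketch, assuming you have already parsed the response body with `json.loads`, that pulls out each team and re-sorts by the `rpi` field; `ranked_teams` is a hypothetical helper name.

```python
def ranked_teams(search_response):
    """Return (rpi, name, score) tuples from a parsed _search response, ordered by RPI.

    With an empty search every hit scores 1.0, so the order the hits arrive in
    carries no ranking information; sorting on "rpi" from _source restores it.
    """
    hits = search_response["hits"]["hits"]
    rows = [(hit["_source"]["rpi"], hit["_source"]["name"], hit["_score"]) for hit in hits]
    return sorted(rows)
```

Elasticsearch can also sort on the server if you add a sort clause to the search body, for example `{"sort": [{"rpi": "asc"}]}`, which scales better than sorting client-side as the index grows.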

Now let's try finding the University of Wisconsin. We'll use the most basic query, a match query, on the word 'Wisconsin' to see what happens:

```shell
curl -X GET 'http://localhost:9200/teams/_search?pretty=true' -d '{"query": {"match": {"name": "Wisconsin"}}}'
```

And now our response:

```json
{
  "took" : 2,
  "timed_out" : false,
  "_shards" : {
    "total" : 5,
    "successful" : 5,
    "failed" : 0
  },
  "hits" : {
    "total" : 1,
    "max_score" : 0.30685282,
    "hits" : [ {
      "_index" : "teams",
      "_type" : "2015",
      "_id" : "AU4EU_AbYcgijd8NQKy0",
      "_score" : 0.30685282,
      "_source":{"rpi":4,"name":"Wisconsin","conference":"B10","record":"31-3","wins":31,"losses":3}
    } ]
  }
}
```

Our search returns only one result, the University of Wisconsin, because Wisconsin is the only document whose name contains the term 'Wisconsin'. Now let's try a fuzzy search for teams in the Big 12 Conference using 'B12'. This time we'll switch to a fuzzy query and pass in an extra parameter, fuzziness, to make our search extra fuzzy:

```shell
curl -X GET 'http://localhost:9200/teams/_search?pretty=true' -d '{"query": {"fuzzy": {"conference": {"value": "B12", "fuzziness": 2}}}}'
```

```json
{
  "took" : 66,
  "timed_out" : false,
  "_shards" : {
    "total" : 5,
    "successful" : 5,
    "failed" : 0
  },
  "hits" : {
    "total" : 3,
    "max_score" : 0.993814,
    "hits" : [ {
      "_index" : "teams",
      "_type" : "2015",
      "_id" : "AU4EU-HEYcgijd8NQKyz",
      "_score" : 0.993814,
      "_source":{"rpi":3,"name":"Kansas","conference":"B12","record":"26-8","wins":26,"losses":8}
    }, {
      "_index" : "teams",
      "_type" : "2015",
      "_id" : "AU4EVAkGYcgijd8NQKy1",
      "_score" : 0.993814,
      "_source":{"rpi":5,"name":"Arizona","conference":"P12","record":"31-3","wins":31,"losses":3}
    }, {
      "_index" : "teams",
      "_type" : "2015",
      "_id" : "AU4EU_AbYcgijd8NQKy0",
      "_score" : 0.3068528,
      "_source":{"rpi":4,"name":"Wisconsin","conference":"B10","record":"31-3","wins":31,"losses":3}
    } ]
  }
}
```

Now our search for B12 returned three results, including documents from the P12 and B10 conferences. Without going too deep into fuzzy search specifics, we are receiving extra results because we allowed up to two edits (changed characters), and both P12 and B10 are within two edits of B12.
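Fuzziness is measured in edits: single-character insertions, deletions and substitutions (the Levenshtein distance). The sketch below is a plain-Python approximation, not Lucene's implementation (Lucene uses Levenshtein automata and can also count a transposition as one edit). It assumes the default standard analyzer lowercased the stored conference codes, while the fuzzy query, being a term-level query, compares its `value` as-is. Under those assumptions exactly Kansas, Arizona and Wisconsin fall within two edits of `B12`, which is consistent with the three hits above and with Kansas and Arizona sharing the top score. `INDEXED_CONFERENCES` and `fuzzy_matches` are hypothetical names.

```python
def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free when equal)
            ))
        prev = curr
    return prev[-1]


# Assumption: conference codes as the standard analyzer would index them (lowercased).
INDEXED_CONFERENCES = {
    "Kentucky": "sec",
    "Villanova": "be",
    "Kansas": "b12",
    "Wisconsin": "b10",
    "Arizona": "p12",
}


def fuzzy_matches(value: str, max_edits: int = 2) -> dict:
    """Teams whose indexed term is within max_edits of value, compared without analysis."""
    matches = {}
    for team, term in INDEXED_CONFERENCES.items():
        distance = levenshtein(value, term)
        if distance <= max_edits:
            matches[team] = distance
    return matches


print(fuzzy_matches("B12"))
```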

Wrapping Up

This barely scratches the surface of what Elasticsearch can do, but hopefully you learned enough to know how it can be used and can start playing around with it. Also, I put together an Elasticsearch cheat sheet with some commonly used endpoints for reference. A later blog post will detail how to integrate Elasticsearch into a Rails application and go over how to use Postgres full-text search as well.